Zelda’s sound rendering mechanism

Game audio has come a long way since the days of Pac-Man. Those early games could manage some beeps and bloops from a single-channel speaker. As time went on, sampled sounds and stereo allowed for more realistic material sounds and music. Then came surround sound systems and directional effects.

Like most modern games, The Legend of Zelda: Breath of the Wild relied on a standard library of sound effects blended into the gameplay. But this would not work for The Legend of Zelda: Tears of the Kingdom, which needed a much more complex system in which sounds could meld together and interact anywhere in the game world. It would simply be too much work to individually simulate an arrow shot in each of the different environments found in the game. Caves need to echo, desert sands absorb sound, and what if the arrow lands in water, or ice, or on stone? Further, all of this must be attenuated based on distance and obstructions so players can tell where sounds are coming from and how far away they are.

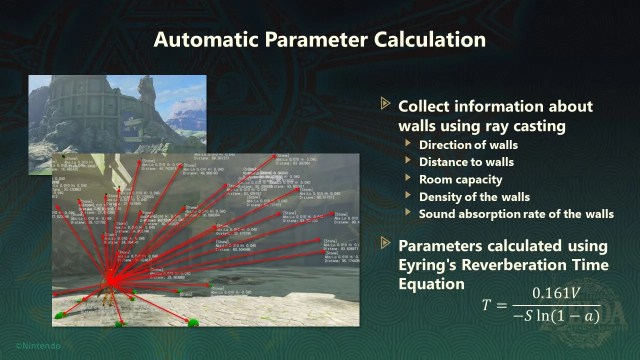

Junya Osada, the lead audio engineer for The Legend of Zelda: Tears of the Kingdom, has started sharing how the team achieved this. They used a variety of specialized filters attached to informational voxel geometry that sits under the map and describes the environmental characteristics above it. Those voxels were already used for game mechanics and were co-opted to help with the sound as well. This environmental information is then combined with the player's distance and direction from each sound, using the team's own method, to create some interesting emergent properties.
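To make the general idea concrete, here is a minimal Python sketch of how a voxel lookup might feed a sound's playback parameters. It is not Nintendo's implementation: the `ENVIRONMENTS` table, the `VOXEL_SIZE` constant, the `render_params` function, the inverse-distance falloff, and the simple left/right pan are all assumptions made for illustration, and obstruction handling is left out entirely.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    reverb: float      # 0..1, how much echo to mix in
    absorption: float  # 0..1, fraction of loudness the surroundings soak up


# Hypothetical environment table; the values are invented for illustration.
ENVIRONMENTS = {
    "cave": Environment(reverb=0.8, absorption=0.1),
    "desert": Environment(reverb=0.05, absorption=0.6),
    "field": Environment(reverb=0.2, absorption=0.2),
}

VOXEL_SIZE = 4.0  # assumed edge length of one voxel, in world units


def voxel_key(pos):
    """Map a world position (x, y, z) to the integer index of its voxel."""
    return tuple(math.floor(c / VOXEL_SIZE) for c in pos)


def render_params(source, listener, voxels, default="field"):
    """Combine the source's voxel environment with listener distance and direction."""
    env = ENVIRONMENTS[voxels.get(voxel_key(source), default)]
    dx, dy, dz = (s - l for s, l in zip(source, listener))
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    # Inverse-distance falloff, clamped so nearby sounds do not blow up.
    gain = (1.0 - env.absorption) / max(1.0, distance)
    # Pan from -1 (left) to +1 (right), assuming the listener faces +z
    # and +x is to the listener's right.
    horizontal = math.hypot(dx, dz)
    pan = dx / horizontal if horizontal > 0 else 0.0
    return {"gain": gain, "reverb": env.reverb, "pan": pan}


# A tiny world: one cave voxel and one desert voxel.
voxels = {(0, 0, 0): "cave", (2, 0, 0): "desert"}
print(render_params((3.0, 0.0, 0.0), (0.0, 0.0, 0.0), voxels))
print(render_params((10.0, 0.0, 0.0), (0.0, 0.0, 0.0), voxels))
```

The point of the sketch is that the same arrow sample gets different treatment purely from where it lands: nobody has to author a separate cave version or desert version of the effect.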

The system isn’t the kind of full-fledged sound rendering system that has been explored before, but it’s a very interesting middle ground between what we have today and such a system. It’s definitely worth a listen (the audio segment comes after an equally interesting talk about the physics engine):