Database Normalization

I had forgotten the rules for the levels of database normalization. Not surprising since I last had the class over 20 years ago. Those problem sets immediately came back to me.

Dylan Browne demonstrates a 321 billion-polygon forest in UE5 (77,376 instances of 20-million-poly trees). Nanite Foliage leverages a voxel-based method to achieve dense forests. The Witcher 4 uses it, and it is about to debut in the upcoming release of Unreal Engine 5.

He also did a fascinating ray-traced translucency experiment.

Dezeen did a good report on 10 different architectural installations at Burning Man 2024.

They also did one for Burning Man 2025.

The ’90s were an amazing time to learn to code. Especially in Europe, hundreds and even thousands of people would gather for weekend-long, round-the-clock, caffeine-fueled coding sessions to flex their latest graphics programming tricks on Amigas, Commodores, PCs, and other hardware.

Imphobia was the leading PC demoscene diskmag of the first half of the 1990s. Founded in 1992, it was published until 1996. In that period, 12 issues were released.

Early issues of Imphobia run in DOSBox. From issue 6 onward the graphics are not displayed correctly, probably because of the use of an obscure video mode. Nevertheless, it’s possible to read the articles. All Imphobia issues are available at scene.org and can be seen at Demozoo.

AMD researchers have published a VRAM-saving technique that leverages procedural generation techniques to eliminate the need for sending the GPU 3D geometry altogether. The GPU utilizes work graphs and mesh nodes to produce 3D-rendered trees on the fly at the LOD (Level of Detail) required for the current frame.

Instead of requiring massive amounts of geometry, the only thing transferred is the code needed to generate the trees in the scene – code that is only a few kilobytes instead of megabytes or even gigabytes.
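To get a feel for why generator code can be so much smaller than the geometry it produces, here is a minimal CPU-side sketch in Python. It is not AMD's method and does not use work graphs or mesh nodes; the 2D branching rule, the `lod_to_depth` falloff, and every constant are assumptions chosen purely for illustration.

```python
import math

def lod_to_depth(distance, max_depth=8, min_depth=2, falloff=10.0):
    """Hypothetical LOD rule: drop one branching level per `falloff` units of distance."""
    return max(min_depth, max_depth - int(distance // falloff))

def generate_branches(x, y, angle, length, depth):
    """Return line segments ((x0, y0), (x1, y1)) for a simple 2D binary tree."""
    if depth == 0:
        return []
    x1 = x + length * math.cos(angle)
    y1 = y + length * math.sin(angle)
    segments = [((x, y), (x1, y1))]
    # Each branch splits into two shorter branches, rotated left and right.
    for spread in (-0.4, 0.4):
        segments += generate_branches(x1, y1, angle + spread, length * 0.7, depth - 1)
    return segments

def generate_tree(distance):
    """Generate a tree whose detail depends on the viewer distance."""
    depth = lod_to_depth(distance)
    return generate_branches(0.0, 0.0, math.pi / 2, 1.0, depth)

if __name__ == "__main__":
    for distance in (0.0, 35.0, 100.0):
        print(distance, len(generate_tree(distance)), "segments")
```

The same few lines produce a detailed tree up close and a coarse one far away, so nothing but the parameters and the generator needs to be stored.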

Read the paper here.

A lot of folks don’t understand how to use hiking poles on ascent/descent to their fullest potential. Those straps are more than just for looking good or to avoid dropping them – they’re for leverage and to help pull yourself up hills and ease yourself down descents. You can hike with your arms as much as your legs and save your knees.

Game audio has come a long way from the days of Pac-Man. Those original games could manage some beeps and bloops from a single-channel speaker. As time went on, sampled sounds and stereo allowed for more realistic material sounds and music. Then came surround sound systems and directional effects.

Like most modern games, The Legend of Zelda: Breath of the Wild relied on a standard library of sound effects blended into the gameplay. But this would not work for The Legend of Zelda: Tears of the Kingdom. It needed a much more complex system to meld sounds together and interact anywhere in the game world. It would simply become too much work to simulate an arrow shot in all the different environments found in the game. Caves need to echo, desert sands absorb sound, and what if the arrow lands in water, or ice, or on stone? Further, all of this must be attenuated based on distance and obstructions so players can tell where things are coming from and how far away they are.

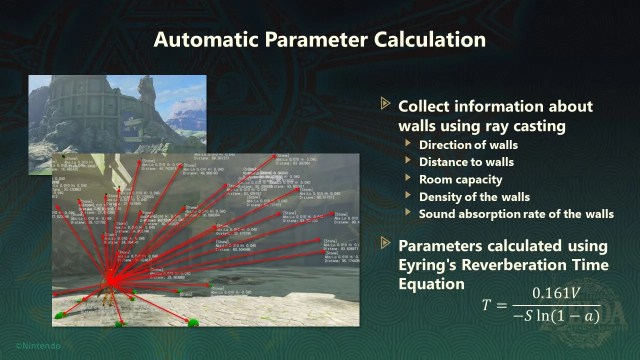

Junya Osada, the lead audio engineer for The Legend of Zelda: Tears of the Kingdom, started sharing the mechanisms of how they achieved this. They use a variety of specialized filters attached to informational voxel geometry under the map, which describes the environmental characteristics above it. Those voxels were used for game mechanics – and were co-opted to also help the sound. This environmental information is then combined with the player’s distance and direction from the sounds using their unique method to create some interesting emergent properties.

The system isn’t a full-fledged sound rendering system of the kind that has been explored before, but it’s a very interesting middle ground between what we have today and such a system. It’s definitely worth a listen (which happens after the equally interesting talk about the physics engine):
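The general idea of looking up environment data from a voxel grid and combining it with distance and direction can be sketched roughly as follows. This is not the game's actual implementation: the `MATERIALS` table, the 2D grid layout, the voxel size, and the inverse-distance rolloff are all assumptions made for the example.

```python
import math

# Hypothetical per-material parameters: (absorption 0..1, reverb 0..1)
MATERIALS = {
    "cave": (0.1, 0.9),
    "sand": (0.7, 0.1),
    "stone": (0.2, 0.4),
}

def voxel_material(grid, position, voxel_size=4.0):
    """Look up the material of the voxel containing a 2D position.

    `grid` is a dict keyed by integer cell coordinates; unknown cells
    fall back to "stone".
    """
    cell = (int(position[0] // voxel_size), int(position[1] // voxel_size))
    return grid.get(cell, "stone")

def sound_params(grid, source, listener, base_volume=1.0):
    """Combine environment data at the source with listener distance and direction."""
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    distance = math.hypot(dx, dy)
    absorption, reverb = MATERIALS[voxel_material(grid, source)]
    # Inverse-distance rolloff, clamped so nearby sounds do not blow up.
    volume = base_volume * (1.0 - absorption) / max(distance, 1.0)
    # Left/right pan from the horizontal direction: -1 is left, +1 is right.
    pan = dx / distance if distance > 0 else 0.0
    return {"volume": volume, "reverb": reverb, "pan": pan}

if __name__ == "__main__":
    grid = {(0, 0): "cave", (1, 0): "sand"}
    print(sound_params(grid, source=(5.0, 0.0), listener=(0.0, 0.0)))
```

Because the environment lives in the grid rather than in each sound effect, a single arrow sample can be reused everywhere and still sound different in a cave than on sand.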

Google ended the Hot Chips 2025 machine learning session with a detailed look at its newest tensor processing unit, Ironwood. First revealed at Google Cloud Next 25 in April 2025, Ironwood is Google’s first TPU (Tensor Processing Unit) designed primarily for large-scale inference workloads – and it’s a whopper.

The architecture is incredible. Each chip delivers 4,614 TFLOPs of FP8 performance, and eight stacks of HBM3e provide 192GB of memory capacity per chip with 7.3TB/s of bandwidth. With 1.2TB/s of I/O bandwidth, the system can scale up to 9,216 chips per pod without glue logic and reach a whopping 42.5 exaflops of performance. It absolutely trounces their previous TPUs.
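The pod-level figure follows directly from the per-chip numbers quoted above, as this quick check shows. The total HBM capacity is a derived value, not one taken from the presentation, and it assumes decimal units (1 PB = 1,000,000 GB).

```python
CHIPS_PER_POD = 9_216
TFLOPS_PER_CHIP = 4_614      # FP8, per chip
HBM_GB_PER_CHIP = 192

# 1 exaflop = 1,000,000 TFLOPs
pod_exaflops = CHIPS_PER_POD * TFLOPS_PER_CHIP / 1_000_000

# Derived, assuming decimal units: 1 PB = 1,000,000 GB
pod_hbm_pb = CHIPS_PER_POD * HBM_GB_PER_CHIP / 1_000_000

print(f"Pod compute: {pod_exaflops:.1f} exaflops")
print(f"Pod HBM:     {pod_hbm_pb:.2f} PB")
```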

Deployment is already underway at hyperscale in Google Cloud data centers, although the TPU remains an internal platform not available directly to customers.

Demozoo.org is a website that serves as a library of not only old-school ’90s-era demo competition submissions – but all the recent ones as well. They have lists of current competitions and news too. An extra feature is that many demos have YouTube videos of the runs, so you don’t have to download the binaries and run them locally.

Nightingale game developers did something many other applications do – they gathered early feedback and used it to help improve the product. What’s particularly interesting is all the different methods and combinations Nightingale developers used.

As you might expect, some of the feedback was simply changes to the balance of certain mechanics if someone felt something was too hard or a mechanic simply wasn’t being understood. But the team went beyond just that by pairing direct feedback with telemetry they were gathering.

Instead of a standard Discord channel that required managers to sift through buckets of messages to collect the few jewels, they let users make suggestions and let people crowdsource and advocate for issues to see if others felt the same way. This alone helped get a clearer signal through the noise. The devs went further and compared that to the metrics they gathered to see if it was true. They would track things like:

When the metrics were compared with the active vote topics in Discord, the devs were able to combine quantitative analytics with the qualitative Discord feedback. If there seemed to be a point where large numbers of people stopped playing, the devs could go to the forums to ask why and get qualitative feedback. This helped not only identify problems – but also get information, or even suggestions, as to how to correct them.
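One way to picture pairing the two kinds of data is the rough sketch below: find the step where the most players drop off, then pull up the top-voted topics tagged with that step. The step names, counts, and vote records are invented for illustration, and nothing here reflects the Nightingale team's actual tooling.

```python
def biggest_dropoff(funnel):
    """Find the step that loses the largest fraction of players.

    `funnel` is an ordered list of (step_name, players_reaching_step)
    with non-zero counts. Returns (step_name, fraction_lost).
    """
    worst_step, worst_loss = None, 0.0
    for (_, prev_count), (step, count) in zip(funnel, funnel[1:]):
        loss = 1.0 - count / prev_count
        if loss > worst_loss:
            worst_step, worst_loss = step, loss
    return worst_step, worst_loss

def related_topics(votes, step):
    """Return vote topics tagged with `step`, most-voted first."""
    matching = [v for v in votes if v["step"] == step]
    return sorted(matching, key=lambda v: v["votes"], reverse=True)

if __name__ == "__main__":
    # Hypothetical telemetry and vote data.
    funnel = [("tutorial", 1000), ("first_realm", 800),
              ("first_boss", 300), ("second_realm", 270)]
    votes = [
        {"title": "Boss hits too hard", "step": "first_boss", "votes": 412},
        {"title": "Crafting menu is confusing", "step": "first_realm", "votes": 95},
        {"title": "Boss arena has no checkpoint", "step": "first_boss", "votes": 530},
    ]
    step, loss = biggest_dropoff(funnel)
    print(step, loss)
    for topic in related_topics(votes, step):
        print(topic["votes"], topic["title"])
```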

The idea is not new. Others have tried using metrics, probably none more than Duolingo with its gamification methods. I think there is a point at which you go too far, so it’s definitely worth watching and drawing some conclusions.